Randomized Control Trial (RCT): studies that have a randomized treatment and control group. Quasi-experimental studies: studies that have a non-randomized treatment and control group Correlation studies: studies that look at the correlation between two data-sets

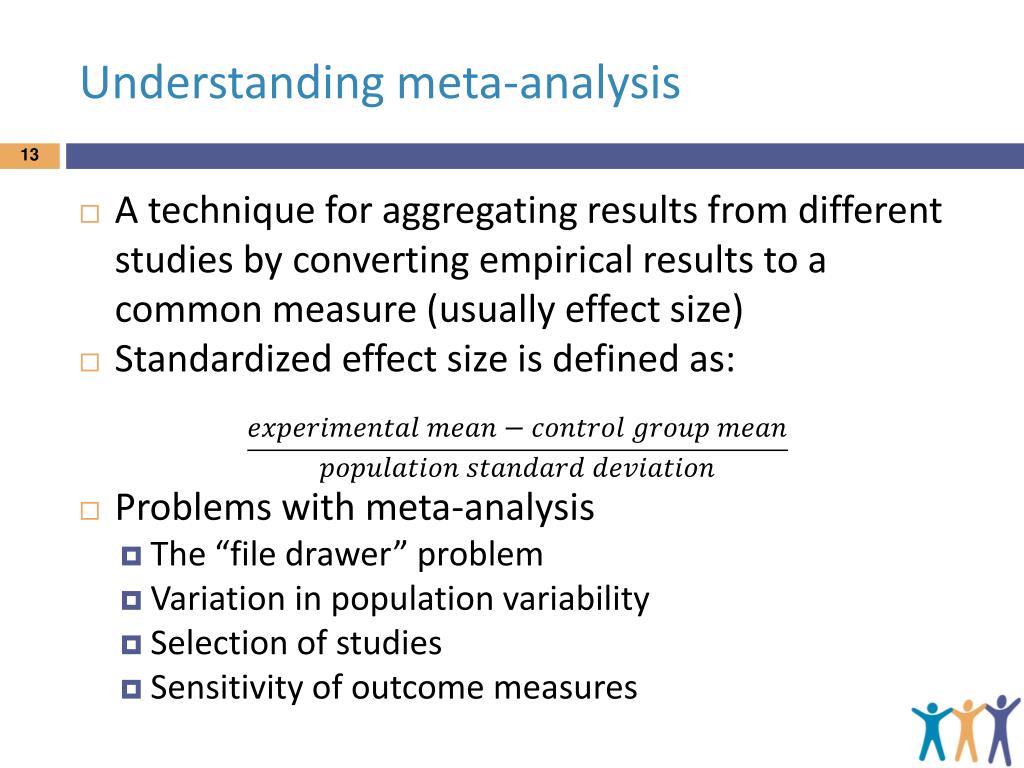

Case studies: studies without control groups or done retrospectively Quality: There are typically 4 main types of studies included in a meta-analysis. Let’s dive into The Main Criticism of Meta-Analysis:ġ. Meta-analysis tends to show random statistical results, not meaningful results Meta-analysis makes apples to oranges comparisons There are scholars who object to meta-analysis and they usually cite three main arguments: Which is why most reading researchers today recommend systematic phonics instruction, as part of a comprehensive literacy program. Their research showed systematic phonics has a mean effect size of. Most famously, the National Reading Panel conducted multiple meta-analyses, including one that compared systematic phonics and whole language instruction. Over the last two decades meta-analyses have been crucial in helping to determine what is best practice in literacy instruction. This is especially important in education research, because scientific results tend to be more variable, and experiments are often carried out by those selling pedagogical products. By using meta-analysis, we can be sure whether or not a finding has been well replicated. A scientific finding is only truly valid if it can be consistently replicated. Meta-analysis also serves a fundamental scientific principle, replication. Ideally, this removes as much bias as possible and provides an interpretation of the most normalized result on a topic. The author must review all studies, and then systematically synthesize quantitative results. Looking at research through meta-analysis is the most systematic way of examining research. I tend to think of a meta-analysis as a literature review with receipts. Typically meta-analysis results are displayed in effect sizes, an equation that seeks to create a standardized mean difference, so that we can compare multiple studies with each other.

This can be problematic, because it tends to be purely qualitative and the researcher gets to present their interpretation, without being beholden to any kind of quantitative data.Ī meta-analysis is similar to a literature review, except the authors also find the average statistical result for studies on a topic. With this approach, a researcher reads all of the studies on a topic and then writes about their findings. In the past, researchers would complete systematic literature reviews to discover the scientific consensus on a topic. This is problematic, because it assumes the newest study is always the most correct, rather than looking to see what the majority of research shows. The media tends to report on each new landmark study, as if it stands in a vacuum, as the sole edict, to what science proves. I don’t know what to think”? This is a common conceptualization for science and it stems from the media poorly reporting on new research. Have you ever heard someone say something to the effect of, “One week scientists say eggs are good for you, the next week they say they’re bad. High quality meta-analyses offer a more transparent and rigorous model for determining best practice in education. Scholars who try to select the best RCTs are likely to select RCTs that confirm their bias. Education is a social science and variability is inevitable. However, education RCTs do not show consistent findings, even when all factors are controlled for. Critics of meta-analysis often suggest that selecting high quality RCTs is a more valid methodology. However, high quality meta-analyses correct for all of these problems. All of these criticisms are valid for low quality meta-analyses.

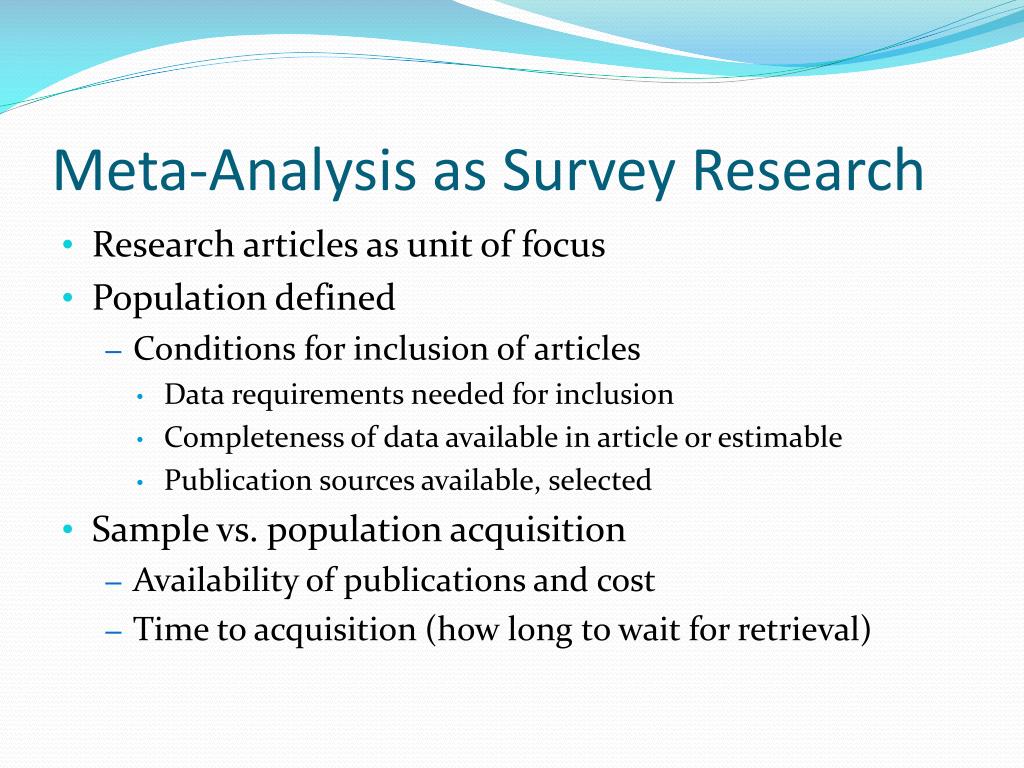

Critics of meta-analysis point out that such analyses can conflate the results of low and high quality studies, make improper comparisons, and result in statistical noise. Meta-analyses are systematic summaries of research that use quantitative methods to find the mean effect size (standardized mean difference) for interventions.
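The effect size described above is a standardized mean difference, and a meta-analysis averages these across studies. As a minimal sketch, the Python below computes one common version, Cohen's d (the difference in group means divided by the pooled standard deviation), for each study, and then averages the results two ways: a plain mean, and an inverse-variance weighted mean, a common fixed-effect approach that gives more weight to larger, more precise studies. The choice of Cohen's d, the large-sample variance approximation, the weighting scheme, and all study names and numbers are assumptions for illustration only. They are not taken from the National Reading Panel or any real study, and many published meta-analyses instead use random-effects models or small-sample corrections such as Hedges' g.

```python
import math
from dataclasses import dataclass


@dataclass
class Study:
    """Summary statistics for one hypothetical two-group study."""
    name: str
    mean_treatment: float
    sd_treatment: float
    n_treatment: int
    mean_control: float
    sd_control: float
    n_control: int


def cohens_d(s: Study) -> float:
    """Standardized mean difference: (treatment mean - control mean) / pooled SD."""
    pooled_var = (
        (s.n_treatment - 1) * s.sd_treatment ** 2
        + (s.n_control - 1) * s.sd_control ** 2
    ) / (s.n_treatment + s.n_control - 2)
    return (s.mean_treatment - s.mean_control) / math.sqrt(pooled_var)


def d_variance(d: float, n_t: int, n_c: int) -> float:
    """Large-sample approximation of the sampling variance of d."""
    return (n_t + n_c) / (n_t * n_c) + d ** 2 / (2 * (n_t + n_c))


def mean_effect_sizes(studies: list[Study]) -> tuple[float, float]:
    """Return (unweighted mean d, inverse-variance weighted mean d)."""
    ds = [cohens_d(s) for s in studies]
    # Each study's weight is 1 / variance, so bigger studies count for more.
    weights = [
        1 / d_variance(d, s.n_treatment, s.n_control) for d, s in zip(ds, studies)
    ]
    unweighted = sum(ds) / len(ds)
    weighted = sum(w * d for w, d in zip(weights, ds)) / sum(weights)
    return unweighted, weighted


# Invented numbers for illustration only; these are not real studies.
studies = [
    Study("Study A", 105.0, 15.0, 40, 100.0, 15.0, 40),  # d = 5 / 15
    Study("Study B", 51.0, 10.0, 200, 48.0, 10.0, 200),  # d = 3 / 10
    Study("Study C", 80.0, 12.0, 25, 72.0, 12.0, 25),    # d = 8 / 12
]

for s in studies:
    print(f"{s.name}: d = {cohens_d(s):.2f}")

unweighted, weighted = mean_effect_sizes(studies)
print(f"Unweighted mean d: {unweighted:.2f}")
print(f"Inverse-variance weighted mean d: {weighted:.2f}")
```

Running it prints d = 0.33, 0.30, and 0.67 for the three made-up studies, an unweighted mean of 0.43, and a weighted mean of 0.34. The weighted mean sits close to Study B because its 400 participants give it the smallest sampling variance and therefore the largest weight, which is one way a meta-analysis keeps a few small studies from dominating the average.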
